Unlocking the Power of Large Language Models: What You Need to Know About LLMs

What is a large language model?

A large language model (LLM), as its name suggests, is a sophisticated AI system designed to process and generate human language. LLMs are trained on enormous amounts of data: billions of words, phrases, and sentences from diverse sources teach them to understand context, syntax, semantics, and even subtle nuances in the way people use language.

Large language models have become one of the most important developments in natural language processing (NLP) because they can deliver coherent, contextually accurate answers. Their many applications include machine translation, text generation for chatbots, and customer service automation. Some of the best-known models are ChatGPT, GPT-3, and BERT.

Large Language Model vs. Generative AI

Although large language models and generative AI both fall under the broad category of artificial intelligence, the two terms are not interchangeable. Generative AI is the wider category: any system that learns from training data to create new content, whether text, images, or audio, can be described as generative AI. Large language models are a specific type of generative AI focused on processing and producing text.

Multimodal Large Language Models

A multimodal large language model can process more than one kind of input, such as text, images, or audio. Because these models are trained to capture the relationships between different kinds of data, they enable more interactive and responsive AI applications, for example answering questions that combine a written prompt with an image.

Examples of Large Language Models

Some of the most popular large language models include:

ChatGPT by OpenAI:

A conversational AI that can answer questions, generate text, and hold a conversation with you.

GPT-3:

Known above all for its versatility in writing content, summarizing text, and answering queries.

BERT by Google:

Designed specifically to understand the context of words in a sentence, which makes it especially good at question answering and language inference.

Is ChatGPT a Large Language Model?

Yes. ChatGPT is built on the GPT family of large language models developed by OpenAI. It was trained on very large datasets using sophisticated algorithms, which allows it to answer questions and provide information in a conversation that closely mimics human dialogue.

Define Large Language Model

A large language model is an artificial intelligence model designed to understand and generate natural language by learning from vast amounts of text. It relies on deep learning architectures that learn to predict the structure and meaning of language; GPT-style models, for example, are trained to predict the next word (more precisely, the next token) in a sequence. This lets the model perform tasks such as text generation, language translation, and conversation.
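To make the idea of "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how a real LLM works internally: it simply counts which word follows which in a toy corpus (a bigram model) and predicts the most frequent follower, whereas an LLM uses a deep neural network that considers a long context of subword tokens. The function names `train_bigram` and `predict_next` and the sample corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```

"cat" wins after "the" because it follows "the" twice in the corpus, while "mat" and "fish" each follow it once. An LLM makes the same kind of prediction, but from learned probabilities over its whole vocabulary rather than raw counts.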

Fine-Tuning a Large Language Model

Fine-tuning is the process of adapting a pretrained large language model to a particular task or industry by training it further on a smaller, more targeted dataset. This improves accuracy and relevance in applications that require specialized knowledge or terminology.
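The sketch below illustrates the principle of fine-tuning at toy scale, under heavy simplifying assumptions: the "model" is a single table of next-word scores trained by gradient descent, not a transformer with billions of parameters. It is first trained on a general sentence, then the same weights are trained further on a small domain-specific sentence with a lower learning rate. The vocabulary, sentences, learning rates, and helper names (`pairs_from`, `train`, `predict`) are all hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pairs_from(text):
    """Split text into (previous word, next word) training pairs."""
    words = text.split()
    return list(zip(words, words[1:]))


def train(weights, pairs, vocab, epochs, lr):
    """Gradient descent on next-word cross-entropy loss.

    weights[i][j] is the score for vocab[j] following vocab[i]; it is updated in place.
    """
    index = {w: i for i, w in enumerate(vocab)}
    for _ in range(epochs):
        for prev, nxt in pairs:
            row = weights[index[prev]]
            probs = softmax(row)
            target = index[nxt]
            # Gradient of -log p(next | prev) with respect to the scores: probs - one_hot(target).
            for k in range(len(vocab)):
                row[k] -= lr * (probs[k] - (1.0 if k == target else 0.0))


def predict(weights, vocab, prev):
    """Return the highest-scoring next word after `prev`."""
    row = weights[vocab.index(prev)]
    return vocab[row.index(max(row))]


vocab = ["the", "customer", "patient", "needs", "a", "refund", "diagnosis"]
weights = [[0.0] * len(vocab) for _ in vocab]

# "Pretraining" on general-purpose text.
train(weights, pairs_from("the customer needs a refund"), vocab, epochs=50, lr=0.5)
before = predict(weights, vocab, "a")

# Fine-tuning: keep the pretrained weights and continue training on targeted data,
# using a smaller learning rate.
train(weights, pairs_from("the patient needs a diagnosis"), vocab, epochs=100, lr=0.1)
after = predict(weights, vocab, "a")

print(before, "->", after)  # refund -> diagnosis
```

The key design point is that fine-tuning does not start from scratch: it reuses the weights learned during pretraining and nudges them toward the new domain.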

Large Language Model Books and Resources

To get to grips with the complexity of LLMs, it helps to read books on large language model architectures, training methods, and AI ethics. Such resources are valuable for beginners as well as for advanced practitioners with an interest in NLP and AI.

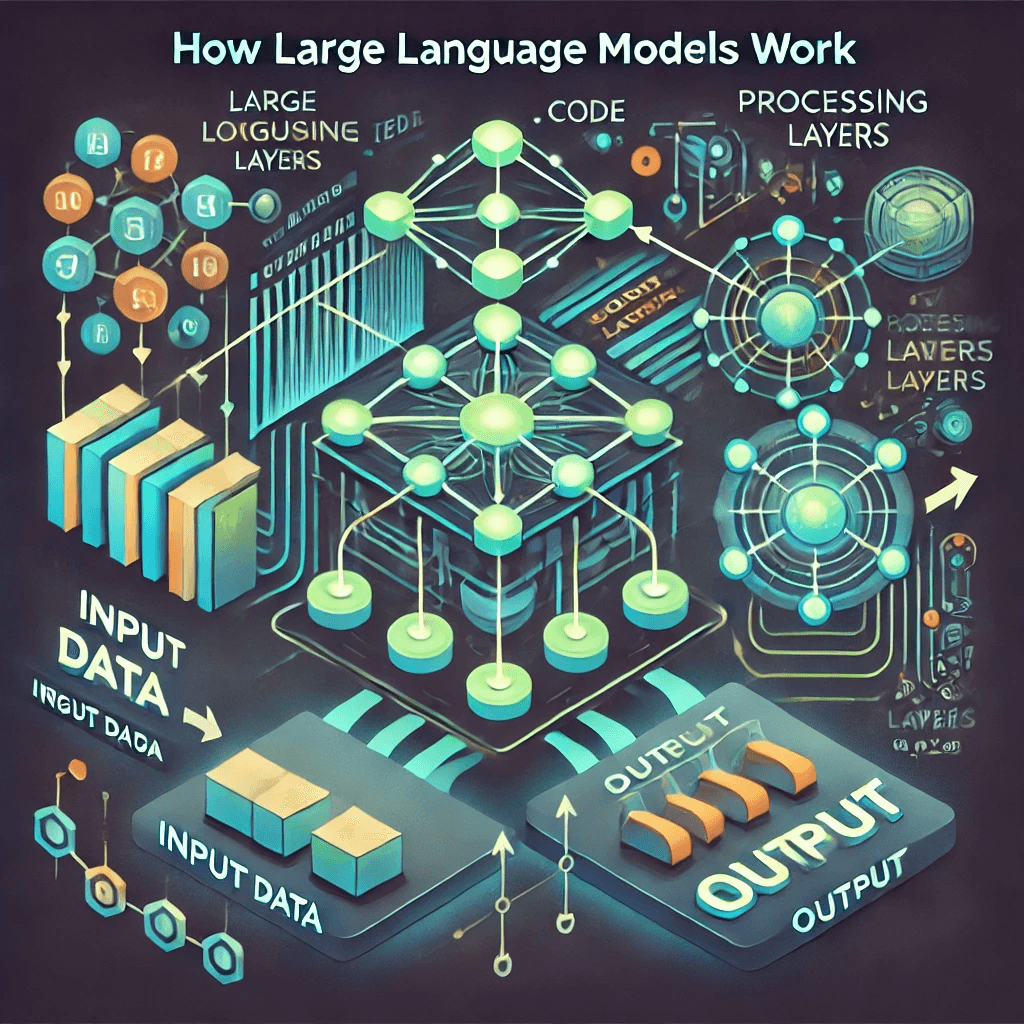

Large Language Model Architecture

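A large language model typically takes the form of a multi-layer neural network based on the transformer architecture. Transformers rely on a mechanism called self-attention, which lets each word in a sequence weigh its relevance to every other word. Unlike earlier recurrent networks, which read text one word at a time, transformers can therefore process all the words of a text in parallel, which makes training on very large volumes of text far more efficient.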
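The heart of the transformer is scaled dot-product attention, commonly written as softmax(Q·K^T / sqrt(d_k))·V, where Q, K, and V are the query, key, and value matrices derived from the token embeddings and d_k is the key dimension. The minimal single-head sketch below is written in plain Python so it has no dependencies; it assumes hand-picked 2-dimensional embeddings and identity projection matrices, whereas real models learn these projections, stack many heads and layers, and run the same matrix operations on GPUs. The helper names are hypothetical.

```python
import math


def softmax(xs):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x has one row (embedding) per token; w_q, w_k, w_v project embeddings
    into queries, keys, and values.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Every token's query is compared with every token's key in one matrix product.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted mix of all value vectors.
    return matmul(weights, v), weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token embeddings
identity = [[1.0, 0.0], [0.0, 1.0]]
output, attn = self_attention(x, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Because the scores for all token pairs come from a single matrix product, nothing in the computation has to wait for the previous token, which is what makes the transformer easy to parallelize.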

Training Large Language Models

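Put simply, training means feeding the model a large dataset of diverse text. Pretraining is usually described as unsupervised or, more precisely, self-supervised: the model learns the patterns and associations between words, phrases, and sentences by predicting words that are hidden or that come next in the text, so no human-written labels are required. Supervised learning on labeled examples is typically added later, for instance during fine-tuning. Depending on the size and complexity of the model, training can take weeks or even months.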
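Self-supervised training needs no labels because the targets come from the text itself. The sketch below shows how raw text can be turned into (context, next word) training examples. It is a simplification that splits on whitespace rather than using a real subword tokenizer; the function `make_training_pairs` and its `context_size` parameter are hypothetical.

```python
def make_training_pairs(text, context_size):
    """Turn raw text into (context, next word) examples.

    The "label" for each example is simply the word that actually comes next,
    so no human annotation is needed.
    """
    words = text.split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        pairs.append((context, words[i]))
    return pairs


pairs = make_training_pairs("large language models learn from raw text", context_size=3)
for context, target in pairs:
    print(context, "->", target)
# ('large', 'language', 'models') -> learn
# ('language', 'models', 'learn') -> from
# ('models', 'learn', 'from') -> raw
# ('learn', 'from', 'raw') -> text
```

During training, the model sees each context and is penalized according to how little probability it assigned to the true next word; repeating this over billions of examples is what takes weeks or months.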

Conclusion

The latest large language models have transformed AI and NLP, giving machines new ways to understand and express human language. As these models grow, their precision and adaptability continue to improve, and they have already proven invaluable across an enormous range of industries.